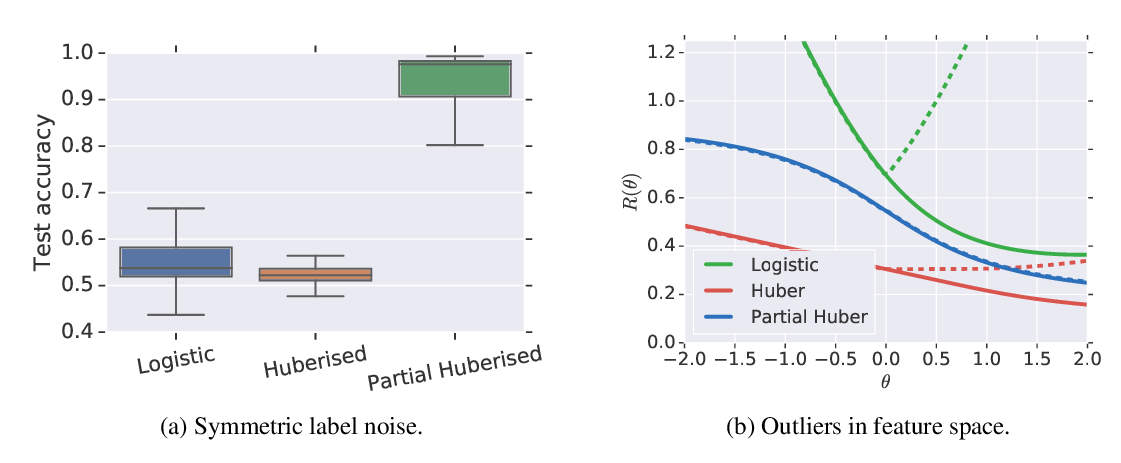

![Daniel Jiwoong Im on Twitter: "Can gradient clipping mitigate label noise?" A: No but partial gradient clipping does. Softmax loss consists of two terms: log-loss & softmax score (log[sum_j[exp z_j]] - z_y)](https://pbs.twimg.com/media/EWsqtbpUcAIr9d8.jpg)

Daniel Jiwoong Im on Twitter: "Can gradient clipping mitigate label noise?" A: No but partial gradient clipping does. Softmax loss consists of two terms: log-loss & softmax score (log[sum_j[exp z_j]] - z_y)

Analysis of Gradient Clipping and Adaptive Scaling with a Relaxed Smoothness Condition | Semantic Scholar

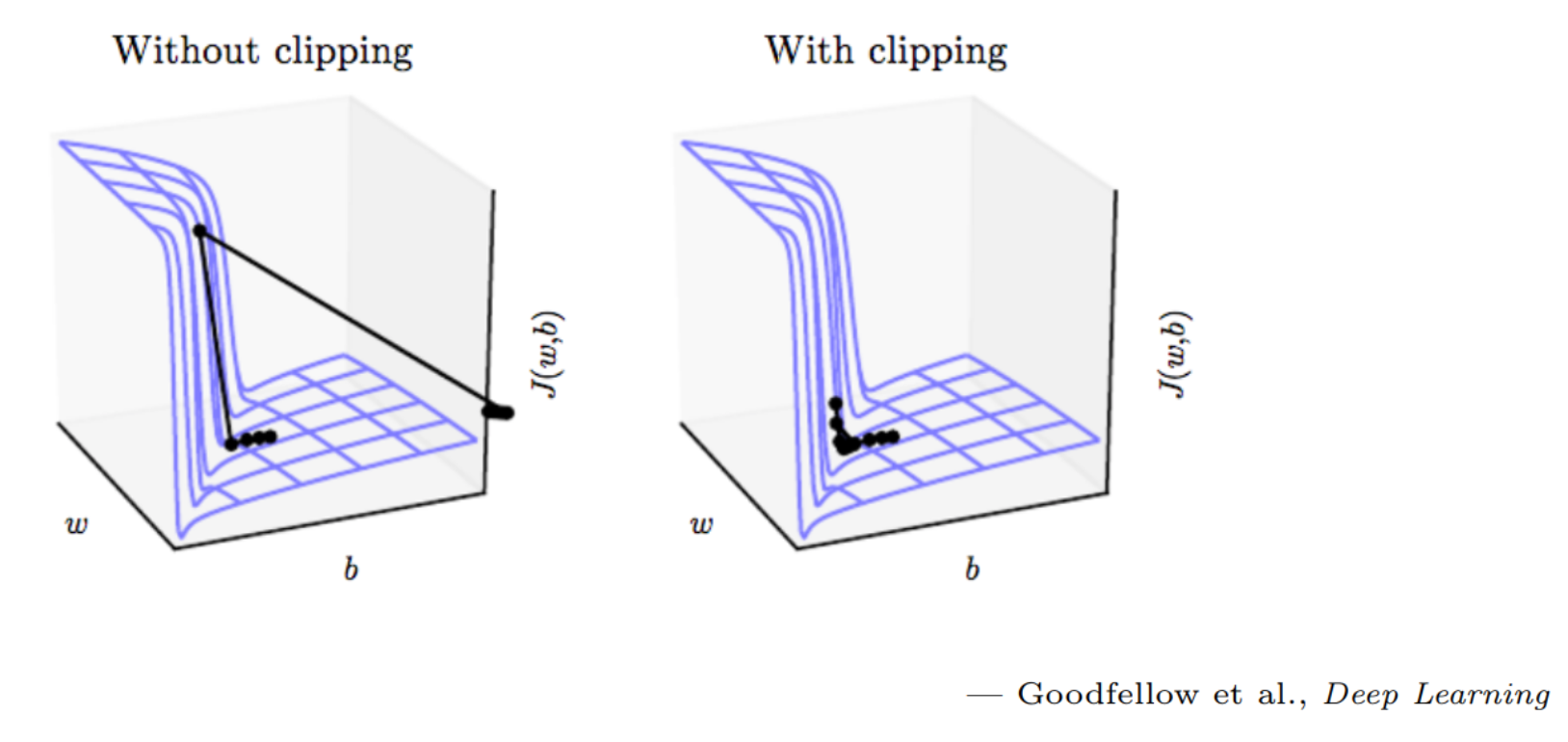

What is Gradient Clipping? A simple yet effective way to tackle… | by Wanshun Wong | Towards Data Science

Allow Optimizers to perform global gradient clipping · Issue #36001 · tensorflow/tensorflow · GitHub

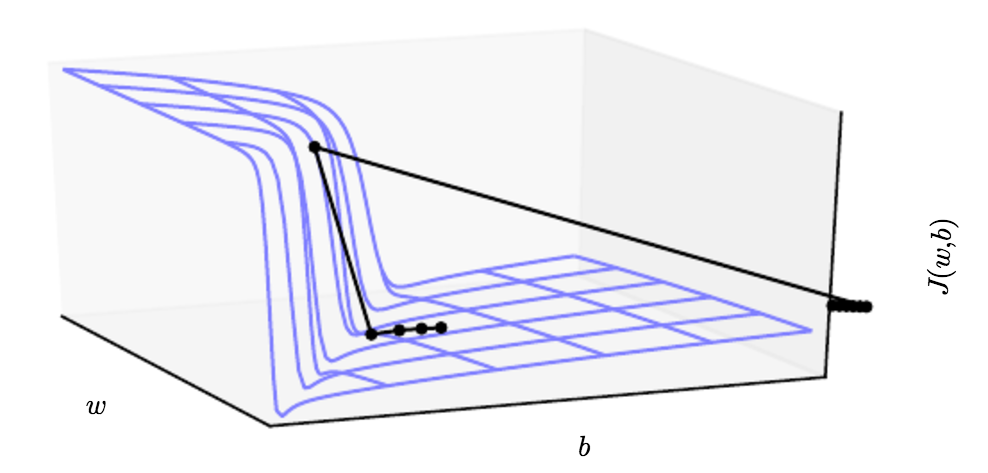

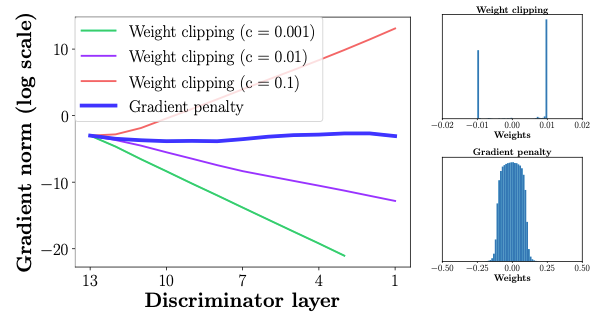

Neural Network Optimization. Covering optimizers, momentum, adaptive… | by Matthew Stewart, PhD Researcher | Towards Data Science

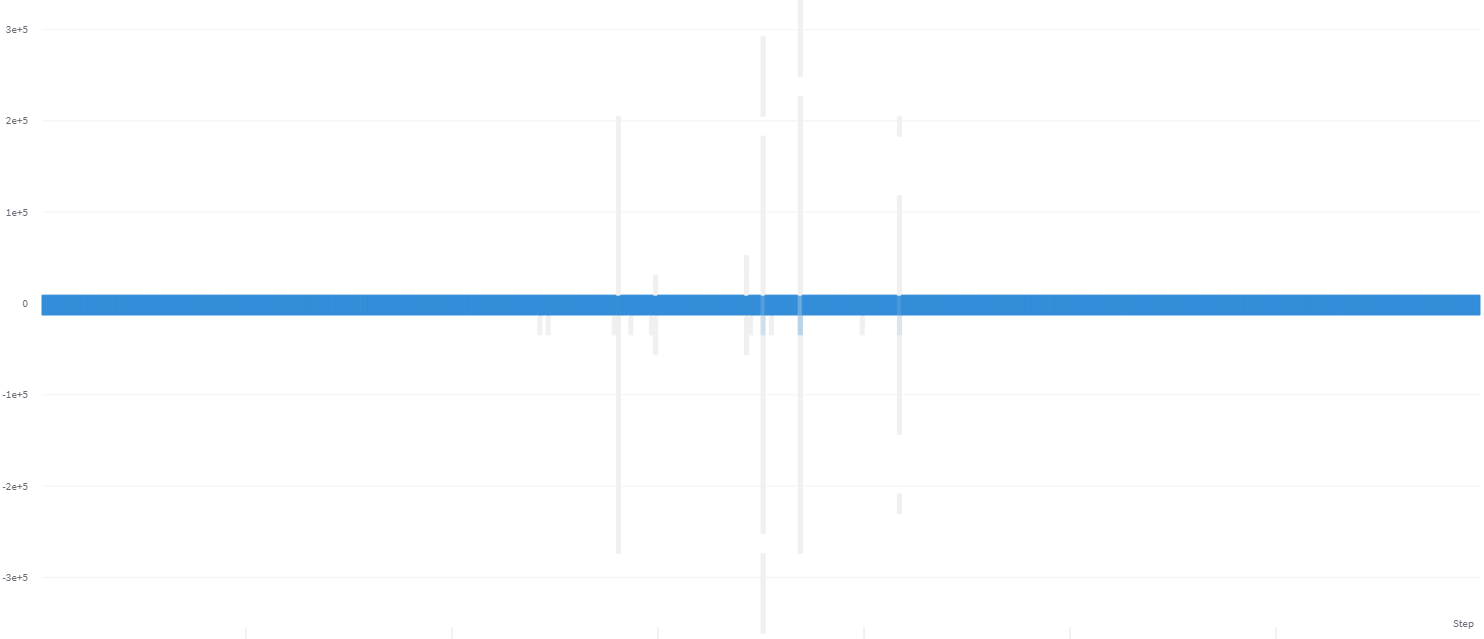

Seq2Seq model in TensorFlow. In this project, I am going to build… | by Park Chansung | Towards Data Science

Stability and Convergence of Stochastic Gradient Clipping: Beyond Lipschitz Continuity and Smoothness: Paper and Code - CatalyzeX

GitHub - sayakpaul/Adaptive-Gradient-Clipping: Minimal implementation of adaptive gradient clipping (https://arxiv.org/abs/2102.06171) in TensorFlow 2.

Keras ML library: how to do weight clipping after gradient updates? TensorFlow backend - Stack Overflow

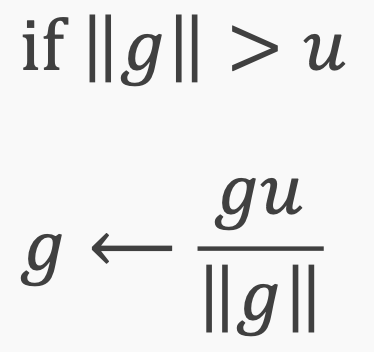

![[PyTorch] Gradient clipping](https://blog.kakaocdn.net/dn/bhDouC/btqJnEUH6N4/cHCdmBGndw51Wu8LV9dFt1/img.png)